Learning deep learning is exciting. The courses are engaging, and you can reproduce the results on your own machine using popular libraries such as Keras for Python and DeepLearning4j for Java.

These libraries even have utility methods that download famous datasets for you. You just have to copy and paste the code and run it. After training (probably a few hours on a CPU) you will have a model that is trained and ready to classify new data.

I did that. I did that many times. I also built small neural nets from scratch, but I was still missing the experience of solving a real problem with this technology and collecting my own dataset.

In this post I will share how I used DeepLearning4j to classify Brazilian coins. I will also try to share how I failed many times before reaching my current model with 77% accuracy.

Figure: Brazilian coin for one real

Collecting the data set

We need a large dataset to train a neural network. A common rule of thumb is at least 1,000 images per class. I started with far fewer, about 50 images per class, which I got from Google Images. Unsurprisingly, training went badly, so I collected more images.

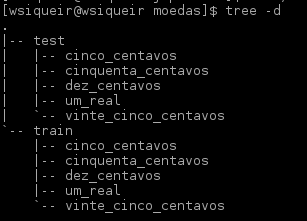

Taking pictures of coins is not the most pleasant task in the world, so I asked my wife and coworkers for help. I even created a small application so people could send me images more easily. Today I have 200 images per class in my training set, organized in directories where the parent directory is the label for the images it contains. With this layout I can use the DeepLearning4j classes I already mentioned on this blog.
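
To make the directory convention concrete, here is a minimal sketch in plain Java that groups image files by the name of their parent directory. It is not the DL4J API (DataVec ships its own helpers for this, such as `ParentPathLabelGenerator`); the class name `ParentDirLabels` and the `dataset/train` path are hypothetical and only there for illustration.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Stream;

/** Groups image files by the name of their parent directory, which serves as the label. */
final class ParentDirLabels {

    /** Returns label -> file paths for every regular file found below root. */
    static Map<String, List<Path>> collect(Path root) throws IOException {
        Map<String, List<Path>> byLabel = new TreeMap<>();
        try (Stream<Path> paths = Files.walk(root)) {
            paths.filter(Files::isRegularFile)
                 // Files sitting directly in root have no label directory, so skip them.
                 .filter(p -> !p.getParent().equals(root))
                 .forEach(p -> byLabel
                     .computeIfAbsent(p.getParent().getFileName().toString(),
                                      label -> new ArrayList<>())
                     .add(p));
        }
        return byLabel;
    }

    public static void main(String[] args) throws IOException {
        // "dataset/train" is a hypothetical default; pass your own directory as the first argument.
        Path root = Paths.get(args.length > 0 ? args[0] : "dataset/train");
        if (!Files.isDirectory(root)) {
            System.out.println("Not a directory: " + root);
            return;
        }
        collect(root).forEach((label, files) ->
            System.out.println(label + ": " + files.size() + " images"));
    }
}
```

Running it against the training directory prints one line per class, which is a quick way to check that every class really has the expected number of images.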

I also have about 40 images per class in my test set. I resized all images to 448x448: I found no neural network trained with larger images, and I did not want to fill my file system with the large photos taken with my phone camera (~3 MB per photo).
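
The resize step can be sketched with the standard Java imaging classes. This is an illustration under my own assumptions, not the tool actually used for the dataset: the class name `ImageResizer` is hypothetical, and the method stretches the photo to the target size without preserving its aspect ratio.

```java
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.image.BufferedImage;
import java.io.File;
import java.io.IOException;
import javax.imageio.ImageIO;

/** Scales an image to a fixed size, e.g. 448x448. */
final class ImageResizer {

    /** Returns a new RGB image of the requested size; the source is stretched to fit. */
    static BufferedImage resize(BufferedImage source, int width, int height) {
        BufferedImage target = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = target.createGraphics();
        try {
            // Bilinear interpolation gives smoother results than the default nearest neighbor.
            g.setRenderingHint(RenderingHints.KEY_INTERPOLATION,
                               RenderingHints.VALUE_INTERPOLATION_BILINEAR);
            g.drawImage(source, 0, 0, width, height, null);
        } finally {
            g.dispose();
        }
        return target;
    }

    public static void main(String[] args) throws IOException {
        if (args.length < 2) {
            System.out.println("usage: ImageResizer <input image> <output.jpg>");
            return;
        }
        BufferedImage photo = ImageIO.read(new File(args[0]));
        if (photo == null) {
            System.out.println("Could not decode " + args[0]);
            return;
        }
        ImageIO.write(resize(photo, 448, 448), "jpg", new File(args[1]));
    }
}
```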

While collecting the dataset I was also running tests and trying to build a good model, and I used the DL4J library to generate images for me. Since my dataset was so small, I used DL4J to apply transformations (crop, resize, rotate, etc.) to the originals, generating new images so I would have more data to train my neural network.
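
To show what such transformations do, here is a small sketch in plain Java with a horizontal flip and a rotation around the image center. It is not the DL4J transform API, only an illustration of the idea; the class name `CoinAugmenter` and the 15-degree angle are assumptions of mine.

```java
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.image.BufferedImage;

/** Produces altered copies of an image so a small dataset yields more training samples. */
final class CoinAugmenter {

    /** Mirrors the image left to right, pixel by pixel. */
    static BufferedImage flipHorizontal(BufferedImage source) {
        int w = source.getWidth();
        int h = source.getHeight();
        BufferedImage out = new BufferedImage(w, h, BufferedImage.TYPE_INT_RGB);
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                out.setRGB(w - 1 - x, y, source.getRGB(x, y));
            }
        }
        return out;
    }

    /** Rotates around the center and keeps the canvas size; uncovered corners stay black. */
    static BufferedImage rotate(BufferedImage source, double degrees) {
        int w = source.getWidth();
        int h = source.getHeight();
        BufferedImage out = new BufferedImage(w, h, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = out.createGraphics();
        try {
            g.setRenderingHint(RenderingHints.KEY_INTERPOLATION,
                               RenderingHints.VALUE_INTERPOLATION_BILINEAR);
            g.rotate(Math.toRadians(degrees), w / 2.0, h / 2.0);
            g.drawImage(source, 0, 0, null);
        } finally {
            g.dispose();
        }
        return out;
    }

    public static void main(String[] args) {
        // A synthetic image stands in for a real coin photo.
        BufferedImage original = new BufferedImage(448, 448, BufferedImage.TYPE_INT_RGB);
        BufferedImage flipped = flipHorizontal(original);
        BufferedImage rotated = rotate(original, 15.0); // 15 degrees is an arbitrary example angle
        System.out.println("flipped: " + flipped.getWidth() + "x" + flipped.getHeight());
        System.out.println("rotated: " + rotated.getWidth() + "x" + rotated.getHeight());
    }
}
```

Coins are a convenient subject for rotation because a rotated coin is still a valid photo of the same coin. A flipped coin shows mirrored text, so whether flipping helps is something to check against the test set.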

Training a neural network

First I tried to create my own neural networks and train them from scratch (with random weights). I chose Convolutional Neural Networks (CNNs) to identify the patterns in a coin and classify it: a few classical CNN layers and a fully connected layer, just as I had learned on the internet.

That was a bad idea: training took too long and I never got a good result, with accuracy never going above 50%. So I took some good neural networks already available on the internet and rewrote them using DeepLearning4j (DL4J).

DL4J's documentation is full of good examples, including examples for famous networks such as VGG16.

Figure: Convolutional Neural Networks. Source: https://www.topbots.com/14-design-patterns-improve-convolutional-neural-network-cnn-architecture/

After grabbing the neural network code from the internet, I noticed that training still took too long and no good result came out of my training sessions. In other words, I spent hours waiting for a result that was far from what I expected. This looked like a good moment to delete my project and forget about it. It was clear that it would take time to build a good dataset, and experience to choose the hyperparameters for my neural network correctly (which would require more time with the dataset and more inspection of the neural network).

Using pre-trained models with DeepLearning4j

DL4J 0.9 comes with great APIs for using pre-trained neural networks. With the Model Zoo API you can get a fresh, well-known architecture (VGG16, ResNet50, GoogLeNet, etc.) and train it on your data, or get a pre-trained model. Some CNN models were trained on ImageNet; in other words, you can take what the neural network already learned from ImageNet and use it to classify your own images!

Once you get a model from the zoo, you can use the Transfer Learning API to replace the last layer with one that has as many outputs as there are labels in your dataset (see the code in the next section of this article).

There are a few architectures to choose from. I chose ResNet50 initialized with ImageNet weights. I would have chosen GoogLeNet or VGG16, but the ResNet50 model is only 90 MB, while VGG16, for example, is 500 MB! In my first test, with a few images, I got 70% accuracy, which was amazing!

The full code can be found on my GitHub. It does not follow the best Java coding practices, but the goal here is to export the model for use in real applications.

The code

Following everything we discussed, this is what happens in the code:

1) Loaded the dataset, using the parent directory as the label for the images it contains.

2) Got the ResNet50 model from the zoo, whose weights were already adjusted over thousands of images from the ImageNet dataset, meaning it already knows many image patterns. I then used the Transfer Learning API to replace only the last layer with a new dense layer that has 5 outputs (the number of classes in my dataset).

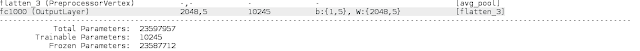

When I ran this code I could see the number of weights that needed to be updated. The other layers were frozen.

Figure: The ResNet50 layers were frozen except the last layer, which was added by us
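
The number of weights left to update can be estimated by hand: a fully connected layer has one weight per input-output pair plus one bias per output. The sketch below uses the 5 outputs from this post and assumes the new layer receives 2048 inputs, which is the size of the pooled feature vector in the standard ResNet50 architecture. That input size is my assumption, not a number taken from the training log, and the real count depends on the input image size and on where the new layer is attached.

```java
/** Back-of-the-envelope count of trainable parameters in a fully connected layer. */
final class TrainableParams {

    /** One weight per (input, output) pair plus one bias per output. */
    static long denseLayerParams(long inputs, long outputs) {
        return inputs * outputs + outputs;
    }

    public static void main(String[] args) {
        long inputs = 2048; // assumed feature size, see the text above
        long outputs = 5;   // number of coin classes in the dataset
        System.out.println("Trainable parameters in the new layer: "
            + denseLayerParams(inputs, outputs));
    }
}
```

Under that assumption only about ten thousand parameters are trained, while the millions of frozen ResNet50 weights stay untouched. This is why transfer learning can work with a few hundred images per class.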

3) Trained the model using the original dataset and the transformed images.

4) Finally, evaluated the model and exported it to disk. In my real application I can simply load it and use it to predict the label of new images.

In my last training run I got 79% accuracy. I consider that a good result, so it is time to build stuff with it!

Figure: The results of this adventure
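
For reference, accuracy is simply the fraction of test images whose predicted label matches the expected one. The sketch below computes it in plain Java; it is not the DL4J evaluation API, and the class name `AccuracyCheck` and the sample labels are hypothetical.

```java
/** Computes classification accuracy from expected and predicted labels. */
final class AccuracyCheck {

    /** Returns the fraction of positions where both arrays hold the same label. */
    static double accuracy(String[] expected, String[] predicted) {
        if (expected.length == 0 || expected.length != predicted.length) {
            throw new IllegalArgumentException("Arrays must be non-empty and of equal length");
        }
        int correct = 0;
        for (int i = 0; i < expected.length; i++) {
            if (expected[i].equals(predicted[i])) {
                correct++;
            }
        }
        return (double) correct / expected.length;
    }

    public static void main(String[] args) {
        // Made-up labels, only to exercise the method.
        String[] expected  = {"1_real", "50_centavos", "25_centavos", "10_centavos", "5_centavos"};
        String[] predicted = {"1_real", "50_centavos", "10_centavos", "10_centavos", "5_centavos"};
        System.out.println("Accuracy: " + accuracy(expected, predicted));
    }
}
```

With about 40 test images for each of the 5 classes, so roughly 200 test images, 79% accuracy corresponds to about 158 correct predictions.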

Applications

The model is still short of what I wanted (85% accuracy), but we can already create interesting applications:

* A free offline mobile application to help blind people identify their coins;

* A Telegram bot that receives an image and returns the classification;

* A mobile app that automatically counts the coin values in a picture and returns the total, so no human has to count coins.

I will try to cover these 3 applications in this blog!
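
As a taste of the coin-counting idea, here is a minimal sketch that turns a list of predicted labels into a total amount. The label names and the five denominations (5, 10, 25 and 50 centavos, plus 1 real) are assumptions of mine, since the post does not list the classes. The sketch also assumes something else has already split the picture into one image per coin, because the model classifies a single coin at a time.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Sums the value of coins given the labels predicted for each coin in a picture. */
final class CoinCounter {

    // Hypothetical label names mapped to their value in centavos.
    private static final Map<String, Integer> VALUE_IN_CENTAVOS = new HashMap<>();
    static {
        VALUE_IN_CENTAVOS.put("5_centavos", 5);
        VALUE_IN_CENTAVOS.put("10_centavos", 10);
        VALUE_IN_CENTAVOS.put("25_centavos", 25);
        VALUE_IN_CENTAVOS.put("50_centavos", 50);
        VALUE_IN_CENTAVOS.put("1_real", 100);
    }

    /** Returns the total in centavos; integer math avoids floating point rounding on money. */
    static int totalCentavos(List<String> predictedLabels) {
        int total = 0;
        for (String label : predictedLabels) {
            Integer value = VALUE_IN_CENTAVOS.get(label);
            if (value == null) {
                throw new IllegalArgumentException("Unknown label: " + label);
            }
            total += value;
        }
        return total;
    }

    public static void main(String[] args) {
        List<String> labels = Arrays.asList("1_real", "50_centavos", "25_centavos");
        int total = totalCentavos(labels);
        System.out.println(String.format("Total: R$ %d,%02d", total / 100, total % 100));
    }
}
```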

We should also consider that we are living in amazing times, when AI is becoming an important part of our lives, but the lack of public datasets may not be a good thing. Whoever owns the data owns the future, and I hope to create more public datasets so people can build their own applications.
